Pierre-Yves Oudeyer

Artificial Intelligence, Machine Learning, Cognitive Science

Human-Robot Interaction

Social learning and human-robot interaction

In order to learn efficiently from interactions with humans who are not engineers, robots do not only need sophisticated learning and perceptual algorithms: interaction design and adaptive interfaces are essential. Indeed, robots eventually learn based on the training data they collect through social human-robot interaction. Thus, the quality of this data is crucial. The vision that explored here is that considerable learning efficiency can be gained by designing and using adequate human-robot interfaces, i.e. interfaces that are both easy to learn by the human, easy to use, and constrain the interaction so that the robot gets seemlessly high-quality training examples from a non-expert user. To achieve this target, it is in particular of high-importance that interfaces and human-robot interaction protocols become themselves adaptive, so that the robot can learn how each human user teachs and interacts.

Inspired by the properties of infant-adult interactions that allow little humans to efficiently learn and develop through social interactions, the stragegy we use is as follows: 1) We try to take functionalinspiration from the constraints that make infant-adult human interactions both efficient and natural; 2) Because those constraints are importantly dependant on the embodiment, and because robots have a different sensorimotor apparatus than humans, we do not necessarily try to copy/mimic human-human interactions and allow ourselves to introduce novel artifacts as mediators facilitating the joint attentional and intentional understanding between robots and humans; We also consider that embodied direct physical interaction, as well as low-level emotional interaction can produce interactions that are both more robust and more intuitive than “traditional” interaction modes using language processing of visual gesture interpretation.

Finally, in the framework of this methodological approach, we consider the technical problem of joint attention and joint intention as the essential problem to be addressed. Joint attention and joint intention have been identified as key processes which allow the bootstrapping of social learning in humans. Human children were shown to have innate constraining mechanisms providing them with those abilities. This should be also the case in robots. If one wants human-robot teaching interactions to be fluid, intuitive, and at the same time provide good quality training data to the robot, two properties should be realized: 1) the robot should perceive easily and robustly both what the human is attending to, and understand what is his current intention; 2) symmetrically, the human should perceive easily and robustly what the robot is attending to and what is his internal state – the robot should be as transparent as possible.

The projects below illustrate how this vision, this approach and this specific difficult technical problem are addressed through the introduction of mediators such as Iphones, laser pointers or clickers for teaching new words, new visual objects or new motor tricks to a robot, as well as through the use of morphological computation allowing a human to physically drive a robot by the hand, and through the elaboration of emotional speech synthesis allowing to convey information about the internal state naturally and without any training for the human.

Keywords: human-robot interfaces, human-robot interaction, robot learning, language acquisition, clicker-training, motor learning, joint attention, joint intention, Iphone, laser pointer, physical HRI, emotional speech synthesis.

Direct link to: HRI for language teaching with the Iphone – Physical HRI for driving a humanoid by the hand – Emotional speech synthesis in HRI – HRI for motor teaching with clicker training.

![]()

Human-robot interfaces for teaching new visually grounded words to a robot

In this project, conducted by Pierre Rouanet (PhD student in the FLOWERS team) and myself, we designed and studied various human-robot interfaces allowing non-expert users to teach new words to their robot. In opposition to most of existing works in this area which focus on the associated visual perception and machine learning challenges, we choose to focus on the HRI challenges with the aim to show that it can improve significantly the learning quality. We argue that by using mediator objects and in particular a handheld device such as an Iphone, we can develop a human-robot interface which is not only intuitive and entertaining but will also “help” the user to provide “good” learning examples to the robot and thus will improve the efficiency of the whole learning system. The perceptual and machine learning parts of this system rely on an incremental version of visual bag-of-words, augmented with a system called ASMAT that makes it possible for the robot to incrementally build a model of a novel unknown object by simultaneously modelling and tracking it and elaborated with David Filliat.

The video below illustrates practically some of the challenges posed by joint attention and joint intention in such language teaching interactions. Then, it shows two interfaces we have designed: 1) one interface using an Iphone which allows on the one hand the human to continuously monitor what the robots sees (or does not see), and on the other hand to provide easy to understand intentions and signals to the robot through simple gestures on the touch screen; 2) a second interferface using a laser pointer and a Wiimote, addressing differently the same challenge. These interfaces, as well as some other ones which we used for comparison, were designed to be easy to use, especially by non-expert domestic users, and to span different metaphors of interaction. They also provide different kinds of feedback of what the robot is perceiving.

In order to achieve robust and real-world evaluation of these concepts and interfaces, together with Fabien Danieau, we set up and ran several pilot studies and finally a large-scale user study, with more than one hundred visitors of a public museum in Bordeaux (Cap Sciences) and over a period of 25 days. This study was designed as a robotic game in order to maintain the user’s motivation during the whole experiment. Among the 4 interfaces compared, 3 were based on mediator objects such as an iPhone, a Wiimote and a laser pointer. The fourth interface was a gesture based interface with a Wizard-of-Oz recognition system added to compare our mediator interfaces with a more natural interaction. Here, we specially studied the impact the interfaces have on the quality of the learning examples and the usability. We showed that providing non-expert users with a feedback of what the robot is perceiving is essential if one is interested in robust interaction. In particular, the iPhone interface allowed non-expert users to provide better learning examples due to its whole visual feedback. This study was also an opportunity to explore how robotic games, analogous to video games, could be elaborated and what kind of reactions it triggered in the general public. >

References

Rouanet, P., Oudeyer, P-Y., Danieau, F., Filliat, D. (2013) The Impact of Human-Robot Interfaces on the Learning of Visual Object, IEEE Transactions on Robotics, 29(2), pp. 525-541. Bibtex

![]()

Physical human-robot interaction with Acroban: Emergent human-robot interfaces with morphological computation

In this project, together with Olivier Ly, we study how morphology and materials can drastically simplify, even self-organize, complex physical human-robot interaction with a biped humanoid robot. The experimental platform is the robot Acroban, which is at the same time capable of complex compliant motor skills, rich, robust and playful whole-body physical interaction with humans, and yet based on standard affordable components. This is made possible by the combination of adequate morphology (a bio-inspired vertebral column) and materials, full-body compliance, semi-passive and self-organized stable dynamics, as well as the possibility to experiment new motor primitives by trial-and-error thanks to light-weightedness. We have shown how a complex analogical human-robot interface allowing a human to “lead the robot by the hand” can spontaneously emerge thanks to morphological computation. Finally, we are studying the strong positive emotional reactions that Acroban triggers, with children in particular, in spite of its metallic non-roundish visual appearance, and we propose the hypothesis of the “Luxo Jr. Effect”.

The following video illustrates how humans (even children) can take the hand of Acroban and drive himto walk in any direction intuitively. Here, the robot only uses generic motor primitives for autonomous stabilization and balancing, tuned such that the robot alone does not walk forward by itself. There is not a single line of code in the system that tells the robot to follow the human if taken by the hand. When external forces, due to a human taking its hands, are applied to the robot (even weak), this slightly change the morphology/distribution of weight of the robot, and spontaneously the robot follows the human. Here, “morphological computation” allows the robot to replace the complicated computational/algorithmic mechanisms that may be used in more traditional approaches for inferring the intention of the human.

The video below illustrates the “Luxo Jr. effect”. Acroban provokes spontaneous highly positive emotional reactions, especially in children. Yet, as opposed to many other robots, its morphology is neither roundish nor cute. He has no big eyes. He is just made of metal, and its appearance shows it explicitly. At first glance, its visual appearance creates low expectation of intelligence and life-likeness. But when it begins to move and one can touch it, its natural dynamics, much more life-like than most other robots, triggers a high contrast and positive surprise. Life unexpectedly appears out of a neutral metallic object, much as Pixar’s Luxo Jr. This is why we call it the Luxo Jr. effect.

Reference:

Ly, O., Lapeyre, M., Oudeyer, P-Y. (2011) Bio-inspired vertebral column, compliance and semi-passive dynamics in a lightweight robot, in Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems(IROS 2011), San Francisco, US.

Oudeyer, P-Y., Ly, O., Rouanet, P. (2011) Exploring robust, intuitive and emergent physical human-robot interaction with the humanoid Acroban, in the Proceedings of IEEE-RAS International Conference on Humanoid Robots, Bled, Slovenia. Bibtex

Ly, O., Oudeyer, P-Y. (2010) Acroban the humanoid: Playful and compliant physical child-robot interaction, in ACM SIGGRAPH 2010 Emerging Technologies. (more videos here). Bibtex

![]()

Emotional speech synthesis and recognition

Humans use a lot prosodic information to convey information about their internal emotional and mental states. This information is essential in the efficient coordination of social interaction and is very useful for joint understanding. In this project, I have investigate how robots might be able to use this modality in the course of social interaction with humans.

First, I have been working on algorithms for emotional speech synthesis. The objective was to manipulate the prosody of computer generated speech signals so that a human listener can perceive different kinds of emotions or attitudes, such as happiness, sadness or anger. The algorithms that I developped were inspired by psychoacoustic studies but in no way tryed to reproduce precisely the way humans modulate their prosody to express emotions. Rather, I developed operators for prosodic deformation which are analogous to the deformation of faces in Walt Disney pictures used to express visually the emotions of characters, and in order to avoir the so-called uncanny effect. More information is available here.

Second, I have conducted one of the first large-scale data mining experiment for the task of recognizing basic emotions in unformal everyday short utterances (see article below). This work was focused on the speaker dependant problem. I compared a large set of machine learning algorithms, ranging from neural networks, Support Vector Machines or decision trees, together with 200 features, using a large database of several thousands examples. The difference of performance among learning schemes can be substantial, and some features which were precedendtly unexplored are of crucial importance. An optimal feature set was derived through the use of a genetic algorithm.

Selected article:

Oudeyer P-Y. (2003) The production and recognition of emotions in speech: features and algorithms,International Journal in Human-Computer Studies , 59(1-2), pp. 157–183, special issue on Affective Computing. Bibtex

![]()

Interaction protocols for language acquisition in robots

How can a human teach new words to a robot in a natural manner? What social regulation mechanisms are needed for robust and intuitive interaction? What are the necessary technological requirements?

These are the questions investigated in this project. The video above shows an example of experiment which was built to study how one can implement flexible and natural interaction protocols based on speech synthesis and recognition and how one can actually realize a primitive form of joint attention with robots. In particular, we used a system of visual tags to augment the robot’s reality so that it can accurately perceive the objects around it, coupled with a behavioural system that allows the human to naturally understand what the robot is paying attention to.

Selected publications:

Lopes, M., Cederborg, M., Oudeyer, P-Y. (2011) Simultaneous Acquisition of Task and Feedback Models, in proceedings of the IEEE International Conference on Development and Learning (ICDL-Epirob), Frankfurt, Germany. Bibtex

Kaplan, F., Oudeyer, P-Y., Bergen B. (2008) Computational Models in the Debate over Language Learnability,Infant and Child Development, 17(1), pp. 55–80. Bibtex

Kaplan F. and Oudeyer P-Y. (2006) Un robot curieux, Pour La Science, no. 348, octobre 2006.

![]()

Human-robot interfaces for motor learning with clicker-training

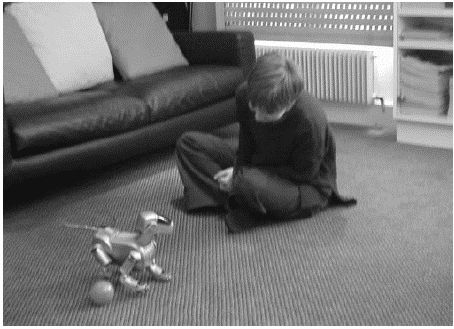

Can we train robot to do tricks the same way people train dogs or dolphins with clicker training ?

Together with Frédéric Kaplan and Eniko Kubinyi, we developed in this project the idea that some techniques used for animal training might be helpful for solving human robot interaction problems in the context of entertainment robotics. We presented a model and a human-robot interface for teaching complex actions to an animal-like autonomous robot based on ”clicker training”, i.e. using a mediator object allowing the robot to understand efficiently the teaching signals coming from the human. This method is used efficiently by professional trainers for animals of different species. After describing our implementation of clicker training on an enhanced version of AIBO, Sony’s four-legged robot, we showed that this new method can be a promising technique for teaching unusual behavior and sequences of actions to a pet robot.

Selected publications:

Kaplan, F., Oudeyer, P-Y., Kubinyi, E. and Miklosi, A. (2002) Robotic clicker training. Robotics and Autonomous Systems, 38(3-4), pp. 197–206. Bibtex